Enhancing Voice Access

Voice Access is a speech-based accessibility tool for users with mobility challenges. This project expands its capabilities to support individuals with Tourette Syndrome.

Accessibility

Assistive Technology

Product Management

4 Months

4 Members

Figma, Miro board

What is Voice Access

Voice Access is an accessibility feature in Windows that lets users control and navigate their computers with voice commands. It is designed to support individuals with mobility impairments by enabling hands-free interaction and greater independence.

How does it work?

You tell Voice Access to wake up

You give Voice Access a command

It registers and processes the command

It executes the command, such as opening a window

If Voice Access can't understand the command, it shows an error
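The wake, command, execute, and error flow described in this section can be sketched as a small state machine. This is only an illustration: the `VoiceSession` class, the `Result` type, the wake phrase string, and the command table are all assumptions made for the example, not how Windows Voice Access is implemented.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Assumed wake phrase and data types, for illustration only.
WAKE_PHRASE = "voice access wake up"


@dataclass
class Result:
    ok: bool
    message: str


class VoiceSession:
    """Toy model of the flow: wake up, take a command, execute it or show an error."""

    def __init__(self, commands: Dict[str, Callable[[], str]]):
        self.commands = commands
        self.awake = False

    def hear(self, utterance: str) -> Result:
        text = utterance.strip().lower()
        if not self.awake:
            if text == WAKE_PHRASE:
                self.awake = True
                return Result(True, "listening")
            # Speech heard while asleep is ignored.
            return Result(True, "ignored")
        action = self.commands.get(text)
        if action is None:
            # Unrecognized speech produces an error message.
            return Result(False, f"Could not understand: {text!r}")
        return Result(True, action())


session = VoiceSession({"open notepad": lambda: "opened notepad"})
print(session.hear("Voice Access wake up"))
print(session.hear("open notepad"))
print(session.hear("hey"))
```

In this model, any unrecognized sound made while the session is awake goes straight to the error branch, which is the friction point the rest of the case study focuses on.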

Why Tourette Syndrome

86% experience motor tics

36% exhibit involuntary speech

They struggle with both

How Voice Access would process their commands

Understanding Challenges

Loss of Control

Users felt a lack of control over what the voice tool processed, often due to unintended commands.

Ambiguous Feedback

They weren’t clearly informed when something went wrong, which led to confusion.

Lack of Customization

We noticed that every user had different types and intensities of tics, triggered by different situations.

We conducted a digital ethnography, analyzing over 20 data points from various online interactions. Through thematic analysis, we uncovered key patterns and insights that led us to the following conclusion:

How might we create a more adaptive voice access system that better understands diverse speech patterns, improves feedback, and supports user customization?

Brainstorming

With the problem statement framed, we mapped the Voice Access journey of a user with Tourette Syndrome to uncover specific accessibility gaps, cognitive load challenges, and moments of friction that affect usability and autonomy in voice-driven interactions.

Final Design

Feature 1

Modified End-of-Utterance (EoU) Detection

Voice Access currently uses pause-based detection to determine the end of a command, which can lead to delays or misinterpretation.

We’re introducing a Custom End-of-Utterance Command that lets users say phrases like “done” or “that’s it” to signal completion, giving them clearer control over input timing. This is similar to how Google Assistant handles closing phrases to prevent accidental triggers.
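A minimal sketch of the idea, assuming the recognizer delivers a stream of already-transcribed words: a command is only closed when one of the user's chosen end phrases is heard, not when a pause occurs. The function name `split_commands` and the `END_PHRASES` tuple are hypothetical names used for this example.

```python
from typing import Iterable, List, Sequence

# Hypothetical closing phrases; in the proposed design the user chooses these.
END_PHRASES = ("done", "that's it")


def split_commands(words: Iterable[str],
                   end_phrases: Sequence[str] = END_PHRASES) -> List[str]:
    """Group words into commands, closing a command only on an end phrase."""
    phrases = [p.lower().split() for p in end_phrases]
    buffer: List[str] = []
    commands: List[str] = []
    for word in words:
        buffer.append(word.lower())
        for phrase in phrases:
            n = len(phrase)
            if buffer[-n:] == phrase:
                command = " ".join(buffer[:-n])
                if command:
                    commands.append(command)
                buffer = []  # start collecting the next command
                break
    # Words left in the buffer were never confirmed, so they are not returned.
    return commands


print(split_commands("open notepad done scroll down that's it close".split()))
```

Here the trailing word `close` is never followed by an end phrase, so it is not treated as a command. The sketch does not try to filter tic sounds out of a confirmed command; it only shows how explicit closing phrases replace pause timing.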

Feature 2

Adaptive Speech Processing

We’re introducing Adaptive Speech Processing, powered by a federated LLM that learns from diverse Tourette Syndrome (TS) speech patterns directly on the user’s device.

This preserves privacy while continuously adapting to individual communication styles. The feature is fully optional and user-controlled: users enable it in settings, giving them autonomy over speech learning.
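One way to picture the opt-in, on-device learning behind Adaptive Speech Processing is federated averaging, sketched below on a toy weight vector. The case study does not specify a training scheme, so everything here is an assumption: the `Device` type, the `opted_in` flag, the learning rate, and the use of plain averaging are illustrative stand-ins, not the project's design.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Device:
    opted_in: bool         # the user enabled speech learning in settings
    gradient: List[float]  # computed locally from the user's own speech


def local_update(weights: Sequence[float], gradient: Sequence[float],
                 lr: float = 0.1) -> List[float]:
    """One gradient step on the device; raw speech data is never shared."""
    return [w - lr * g for w, g in zip(weights, gradient)]


def training_round(global_weights: Sequence[float],
                   devices: Sequence[Device]) -> List[float]:
    """Average the locally updated weights from opted-in devices only."""
    updates = [local_update(global_weights, d.gradient)
               for d in devices if d.opted_in]
    if not updates:
        return list(global_weights)  # nobody opted in, so nothing changes
    return [sum(column) / len(updates) for column in zip(*updates)]


devices = [
    Device(opted_in=True, gradient=[1.0, 0.0]),
    Device(opted_in=True, gradient=[0.0, 1.0]),
    Device(opted_in=False, gradient=[100.0, 100.0]),  # excluded from training
]
print(training_round([1.0, 1.0], devices))
```

Only weight updates leave a device in this scheme, and a device whose user has not opted in contributes nothing, which mirrors the privacy and autonomy goals stated for this feature.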

Evaluation

1. Micro-interaction delays risk user frustration and drop-off.

2. Generic error messages can confuse users and hinder task recovery.

3. Harsh error visuals can harm user confidence and emotional tone.

Thanks for viewing

View other projects

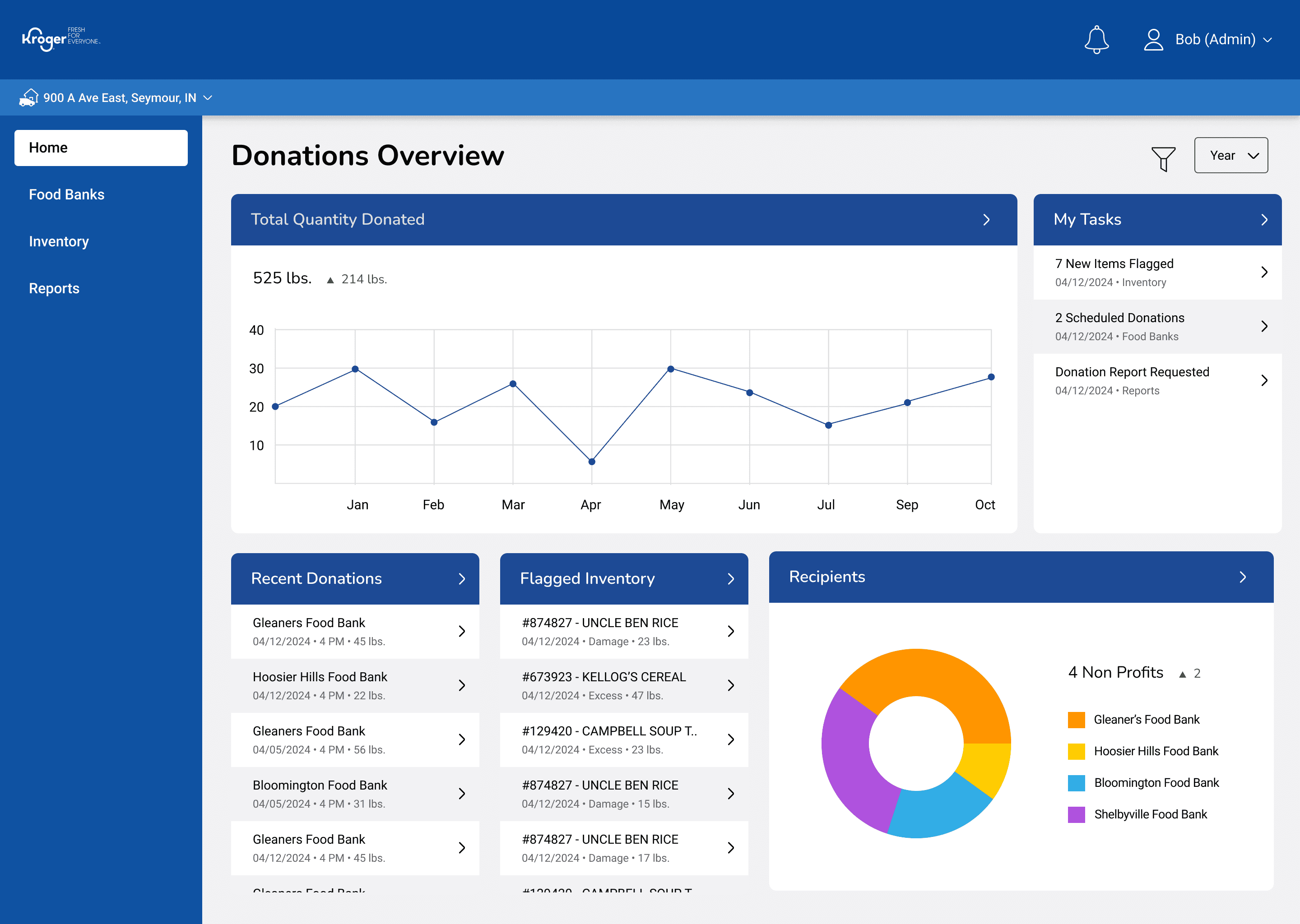

Streamlining Kroger's Food Donation Management

SUPPLY CHAIN | ECO HACKATHON

Optimized navigation and analytics for Jetsweat Fitness

B2B SAAS | DESIGN SYSTEM | SUMMER 24'

Shipped

Enhancing grocery

shopping with a Retail Buddy

USER EXPERIENCE | PROTOTYPING